Our Approach

Score Decomposition

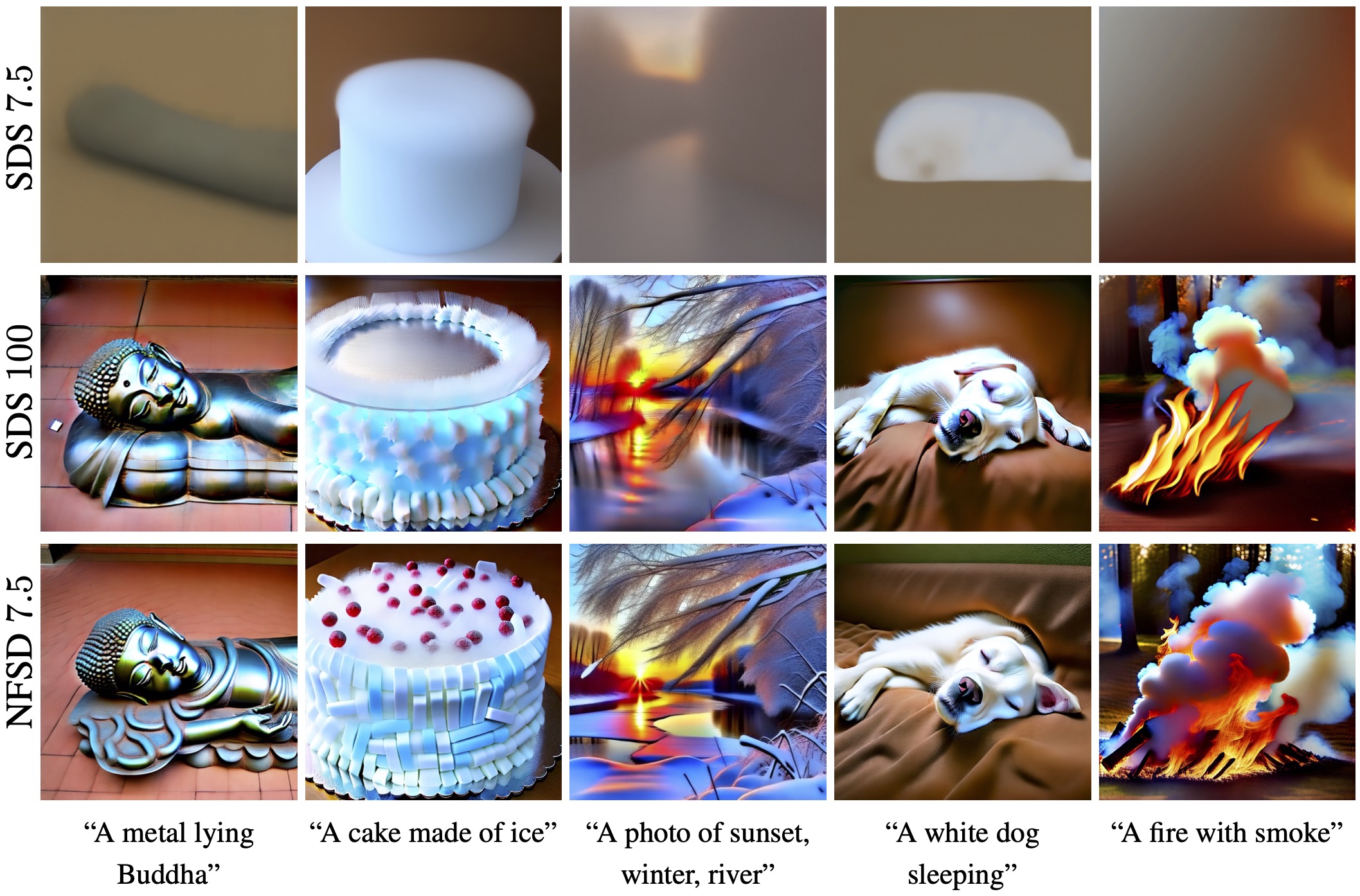

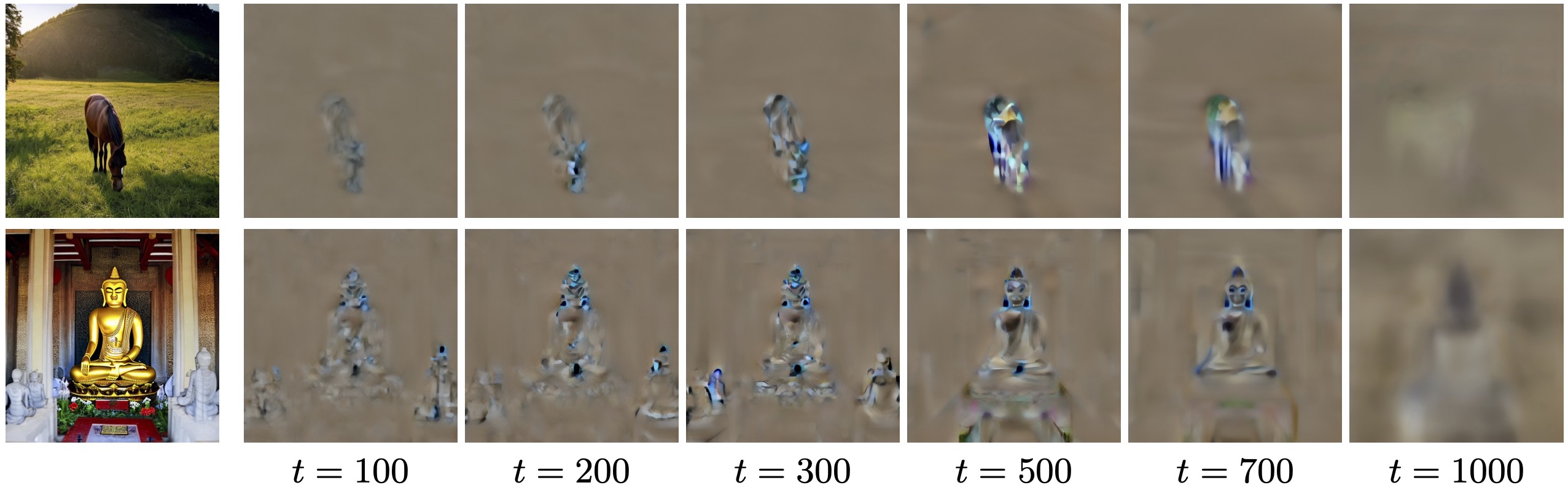

condition direction. The difference \(\dirc = \epred - \epuncond\) may be thought of as the direction that steers the generated image towards alignment with the condition \(y\). The condition direction \(\delta_c\) (demonstrated below) is empirically observed to be aligned with the condition and uncorrelated with the added noise \(\epsilon\).

noise and in-domain directions. Rewriting the CFG score using the condition direction \(\dirc\) defined above, we obtain: $$ \epredcfg = \epuncond + s(\epred - \epuncond) = \epuncond + s\dirc. $$

The unconditional term \(\epuncond\) is expected to predict the noise \(\epsilon\) that was added to an image \(\x \sim p_{\text{data}}\) to produce \(\z_t\). However, in SDS, \(\z_t\) is obtained by adding noise to an out-of-distribution (OOD) rendered image \(\x = g(\theta)\), which is not sampled from \(p_{\text{data}}\). Thus, we can think of \(\epuncond\) as a combination of two components, \(\epuncond = \dirr + \dirn\).

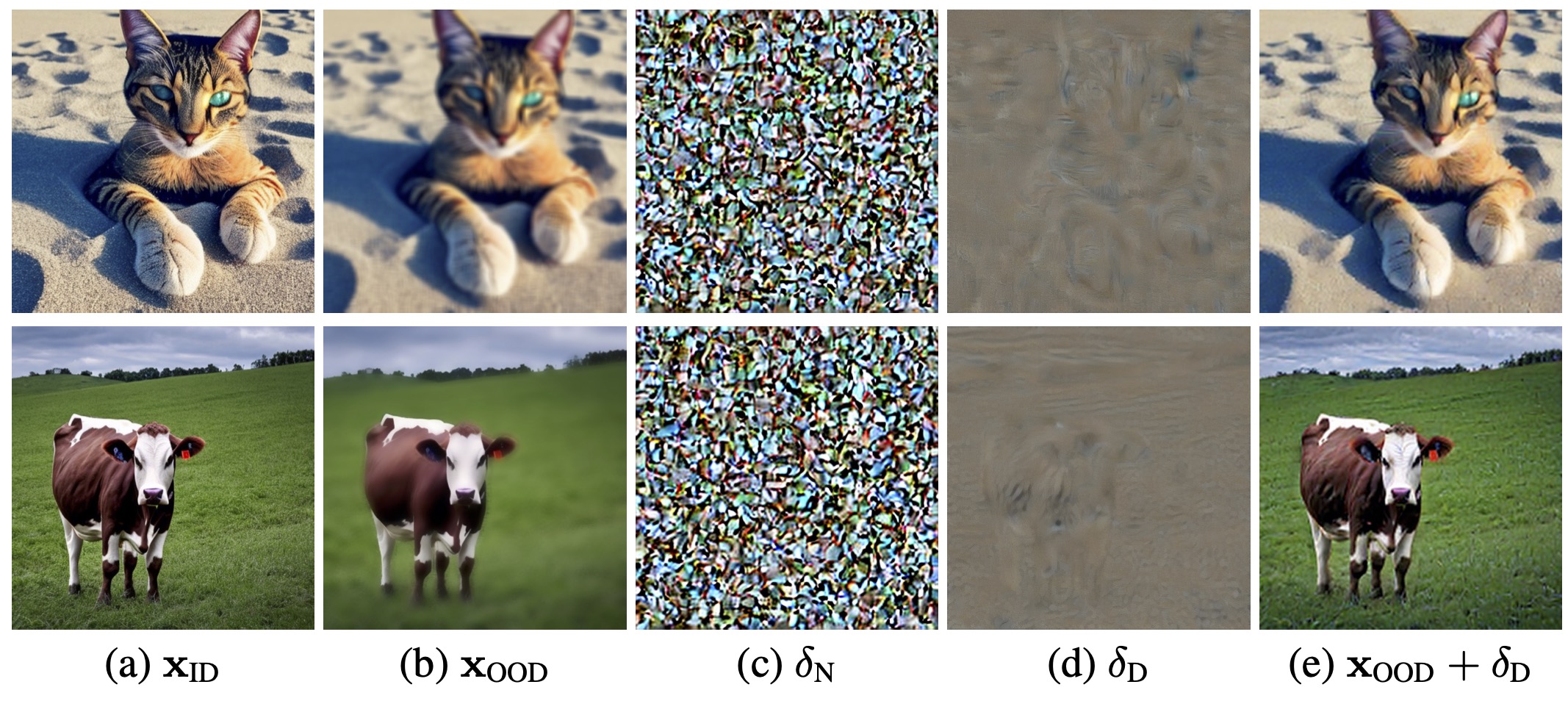

We attempt to visualize the two components by examining the difference between two unconditional predictions \(\epsilon_\phi(\z_{t}(\x_{\textrm{ID}}); \varnothing,t)\) and \(\epsilon_\phi(\z_{t}(\x_{\textrm{OOD}}); \varnothing,t)\), where \(\z_{t}(\x_{\textrm{ID}})\) and \(\z_{t}(\x_{\textrm{OOD}})\) are noised in-domain and out-of-domain images, respectively, that depict the same content and are added the same noise \(\epsilon\). Intuitively, while \(\epsilon_\phi(\z_{t}(\x_{\textrm{OOD}}); \varnothing,t)\) both removes noise (\(\dirn\)) and steers the sample towards the model's domain (\(\dirr\)), the prediction \(\epsilon_\phi(\z_{t}(\x_{\textrm{ID}}))\) mostly just removes noise (\(\dirn\)), since the image is already in-domain. Thus, the difference between these predictions enables the sepeartion of \(\dirn\) and \(\dirr\).

As can be seen, \(\dirn\) indeed appears to consist of noise uncorrelated with the image content, while \(\dirr\) is large in areas where the distortion is most pronounced and adding \(\dirr\) to \(\x_{\textrm{OOD}}\) effectively enhances the realism of the image (column (e)).

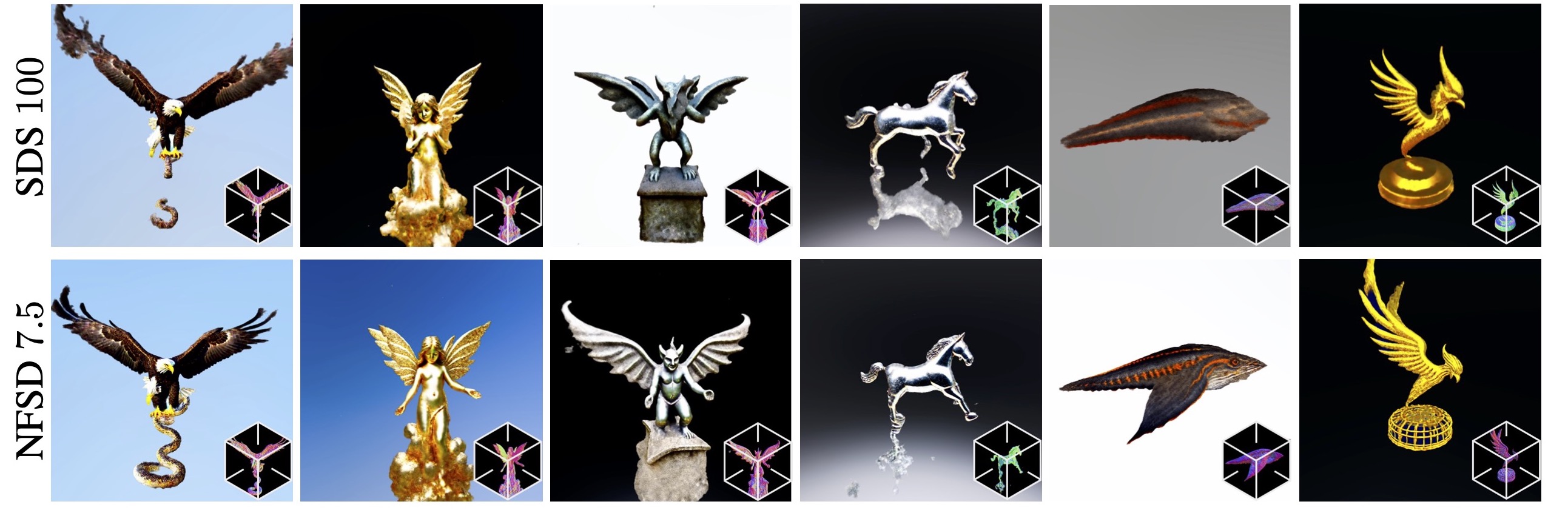

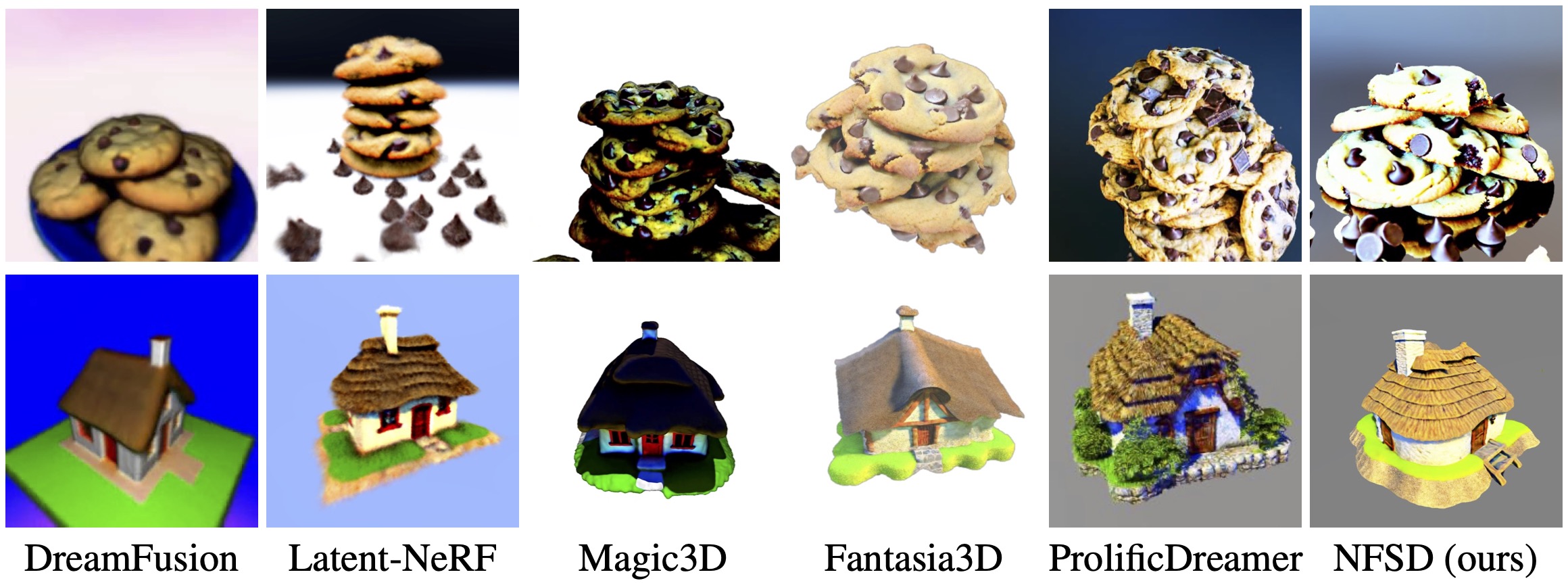

Noise Free Score Distillation

As discussed above, ideally only the \(s\dirc\) and the \(\dirr\) terms should be used to guide the optimization of the parameters \(\theta\).

To extract \(\dirr\), we distinguish between different stages in the backward (denoising) diffusion process.

For sufficiently small timestep values \(t < 200\), \(\dirn\) is rather small, and the score \(\epuncond = \dirn + \dirr\) is increasingly dominated by \(\dirr\).

As for the larger timestep values, \(t \geq 200\), we propose to approximate \(\dirr\) by the difference \(\epuncond - \epneg\), where \(\pneg =\) ``unrealistic, blurry, low quality, out of focus, ugly, low contrast, dull, dark, low-resolution, gloomy''. Here, we are making the assumption that \(\delta_{C=\pneg} \approx -\dirr\), and thus $$ \epuncond - \epneg = \dirr + \dirn - (\dirr + \dirn + \delta_{C = \pneg}) \approx \dirr. $$

To conclude, we approximate \(\dirr\) by $$ \dirr = \begin{cases} \epuncond , & \text{if } t < 200 \\ \epuncond - \unet(\z_t; y=\pneg,t), & \text{otherwise,} \end{cases} $$ and use the resulting \(\dirc\) and \(\dirr\) to define an alternative, \(\textit{noise-free score distillation}\) loss \(\Loss_\text{NFSD}\), whose gradients are used to optimize the parameters \(\theta\), instead of \(\grad{\theta}\Loss_\text{SDS}\): $$ \grad{\theta} \Loss_\text{NFSD} = w(t) \left(\dirr + s\dirc \right) \frac{\partial \x}{\partial \theta}. $$